A Jaw-Dropping Theory of Consciousness

By Vinay Kolhatkar

October 23, 2018

“Language is an organ of perception, not simply a means of communication” ― Julian Jaynes

Gorillas are social animals that live in groups to help them survive. Males protect females and the offspring of the group. In a group of up to 30, only 1 to 4 are male adults. The rest are blackbacks (young males), adult females, and their offspring. All males get the silvery hair patch on their back when they reach adulthood, but only the strongest becomes the leader.

The “Silverback” leader makes decisions, resolves conflicts, produces offspring, and defines and defends the home area. He assumes an exclusive right to mate with the females in the group.

After reaching sexual maturity, both females and males often leave the group in which they were born to join another group. Males have to do it to avoid a conflict with the dominant leader over the females. Females leave to avoid the dominant male mating with their female descendants, perhaps to prevent inbreeding!

Evolution may have endowed them with species propagation instincts, besides individual survival.

And all this happens without a self-reflective consciousness or sign language much beyond shrieks and grunts.

In the Behavioral Ecology journal, Mark Moffett of the Smithsonian Institution describes two methods used by different organisms to identify the members of their societies. In individual recognition societies, says Moffett, each member has to recognize as an individual every other member of its society, which puts a load on memory. The gorillas identify their group by sight.

Social insects, though, have anonymous societies. Indeed, some ant societies are so large that many individuals never meet each other. They are bonded by shared “markers” such as hydrocarbon molecules they smell on one another. As long as an ant has the right scent, it will be accepted. Foreign ants have a different scent and are shunned or killed.

It’s easy enough to see that pre-linguistic hominids, upright apes with bigger brains and memories, would not have needed language to form small clans. But as the clans grew larger, language (estimated to be about 50,000 years old; estimates vary) was probably the key to holding them together. At some stage, there occurred an accelerated development in the ability to reason and conceptualize, and a self-reflective consciousness emerged.

But when? And how? Darwin’s majestic symphony is incomplete. Biology’s best answer today is that consciousness evolved by natural selection at some point, and that this faculty is an emergent property of complex organisms. Yet, neuroscientists, equipped with state-of-the-art imaging technology, have tried, in vain, to locate the neurological substrate of consciousness and explain its “emergence,” once and for all.

The cluster of neurons put to sleep by a blow to the head or by an anesthetist’s injection isn’t the Holy Grail of Consciousness, for the issue we are about to address needs redefining.

By consciousness, we mean an introspective mind space in which the thinker not only recognizes his or her selfhood, but also has access to an autobiographical, episodic memory and an ability to carry on inner speech, to ponder an action, to override instincts, to deceive or lie, and to change his or her mind. All this requires a representation of the external world and our place in it, in the past and the present, plus an ability to envision a future world with us in it.

In that introspective mind space, a constructed analog of the self, the “I,” narrates to itself and moves around: contemplating, losing focus, refocusing, and constructing representations of actual, historic, and potential realities and their relation to us and other human beings.

The Radical Theories of Julian Jaynes

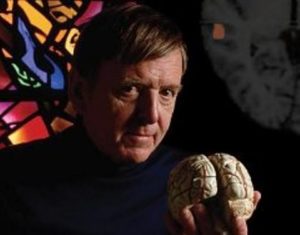

Princeton University psychologist Julian Jaynes (1920-1997) spent his entire life trying to unlock the consciousness riddle—how did it evolve? And, within a lifespan, what gives rise to it?

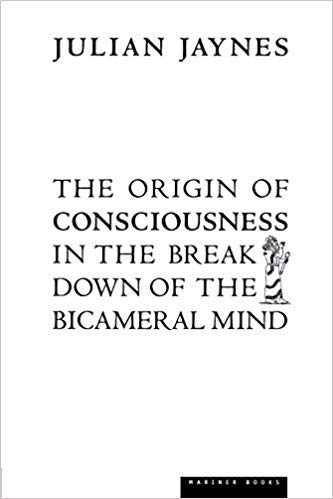

In 1976, Jaynes published his answers in “The Origin of Consciousness in the Breakdown of the Bicameral Mind” (OC), outlining four independent but interrelated hypotheses.

In OC, he comes across as an assiduous, multidisciplinary researcher, cautious to a fault when traversing archaeological terrain, but a maverick theorizer, turning every convention on its head.

Hence the reaction of celebrated scientist Richard Dawkins is representative of many a reader’s: “… either complete rubbish or a work of consummate genius, nothing in between …”

Nevertheless, Jaynes’s creativity was undeniable—eloquently summarized thus by David Stove (1927-1994), Professor of Philosophy, University of Sydney: “The weight of original thought in it [OC] is so great that it makes me uneasy for the author’s well-being: the human mind is not built to support such a burden.” Despite that, at first, much of academia ignored OC.

In January 2007, though, an intended “accessible re-introduction,” “Reflections on the Dawn of Consciousness: Julian Jaynes’s Bicameral Mind Theory Revisited” (DC), was published, containing a number of independent, mostly positive critiques by philosophers, neuroscientists, anthropologists, psychologists, and psycholinguists. When, in June 2013, the Julian Jaynes Society (JJS) held a conference in Charleston, West Virginia, exclusively dedicated to Jaynes’s theories of consciousness, it featured 26 scholars from a wide range of fields over three full days.

Jaynes, theorist sublime of the human pre-conscious zombie, had risen from academe’s graveyard, as controversial as ever. Scientific curiosity about his theories was rekindled.

The Four Interrelated Hypotheses of the Theory

I. Introspective mind space (IMS) [This is what Jaynes calls consciousness] is a “learned process based on metaphorical language” [emphasis mine]. It doesn’t have a material substrate in the brain.

II. Human functioning prior to the advent of IMS: The two chambers of the brain operated in a “bicameral” way in that decisions, required in times when instincts failed, were experienced as a compelling, internally audible command. The instruction would have arisen as a result of edicts stored in the subconscious early in life but was not accessible to the person as a conscience or “inner voice.” This voice was attributed to “the gods.” Such a bicameral mentality itself came into being after language developed, and as human groups became kingdom-civilizations unable to rely on small groups controlling social interactions via sight. Jaynes opines that IMS arose when the bicameral mentality broke down under extreme conditions of famine, wars, and devastation whereby preset rules could no longer guide the human. IMS proved superior for survival and edged out bicameral functioning primarily via culture’s effects on language.

III. IMS arose as a relatively new development in human history, most likely only 3,000 to 5,000 years ago, first in Mesopotamia and then in Ancient Greece.

IV. The neurological model of the human brain: Jaynes proposed, decades before modern neuroimaging confirmed his hypothesis, that auditory hallucinations would be generated by the language areas in the right hemisphere and processed by the left. This prediction has now been verified by numerous studies. The right hemisphere is attuned to chanting, music, verse, lullabies, etc., which excite electrical activity in the brain, whereas logic and language are processed primarily in the left hemisphere (in certain cases, such as injury, neuroplasticity facilitates functional changes, but we will set that aside here).

It’s the dating (III above) that’s by far the most controversial. And we would be wise not to heed Dawkins’s counsel of “all rubbish or the work of consummate genius.” Not only are the hypotheses independent; hypothesis I is testable and has profound implications, and IV is no longer even controversial. It’s not all or nothing. Let’s look at II (the hypothesized mentality of ancient humans), then I (what enables introspection), and then III.

Hypothesis II, that ancient human mentality consisted of obedience to an auto-generated command issued by the right hemisphere to the left, has an astonishing explanatory power over a wide range of cultural realities that are still ubiquitous today.

Hypothesis II—the Bicameral Mentality

Say I’m cruising around in Hollywood, discussing the narrative of a much-acclaimed David Lynch psychological thriller with a companion, my mind drifting onto other matters: yesterday’s job interview, a roof that needs raking.

Suddenly, the sight of a traffic jam ahead reorients my consciousness. And my companion’s.

“Oh wait … a left here, and we can take a detour via Mulholland Drive,” she laughs.

“The same thought crossed my mind, too.” I marvel at the coincidence, tongue in cheek.

If we time-traveled a Bicameral to take my place, an inner voice, audible, but only to him, would command him to take a left. The right hemisphere would produce the instruction. The speech-processing left brain would “hear” the “voice.” The voice would feel compelling, as though his own personal “god” was telling him what to do. Pun aside, the Bicameral wouldn’t even recognize this voice as his own.

And so it was, for thousands of years, as rulers of men (kings, tribal elders, and older relatives, conditioned to become the Silverbacks) found their decrees engraved into the subconscious of those ruled, surfacing as audible directives when needed. The edicts were used only to cope with unusual situations; the rest of the time the “human” was on instinctive autopilot. The Bicamerals believed that their dead ancestors continued to guide them, leading to elaborate burial rituals and attempts at preserving the body after death. And, as the voices became attributed to multiple gods in the sky, our bicameral cousins constructed idols from wood and stone and clay to worship and adorn. And, understandably, dynasties that presided over a theocracy lasted long: religion was the stability drawcard in a politico-religious complex.

Did a bicameral mentality ever exist?

Jaynes’s excavation through the sands of time in support of his theory is extensive. The JJS also cites many other relevant archaeological and anthropological studies.

But surely then, we might expect some vestiges of the bicameral mentality today?

And here Jaynes turns us toward the sweeping prevalence on the planet of previously unexplained phenomena like hypnotism (where subjects obey a “voice”), the hearing of voices associated with schizophrenia, auditory and visual hallucinations of normal people under extreme stress, idolatry even amongst the highly educated in certain countries, ancestral worship among remote tribes, the heralding of oracles in history (as humankind transitioned, those that continued to hear “voices” were hailed as mediums to divinity), Tourette’s syndrome, children with imaginary playmates, and the physiological changes that accompany spirit possession and speaking in tongues. (Warning: the last hyperlinked video is particularly tough to watch.)

But Jaynes doesn’t stop there. The rise of monotheistic religion itself may have been answering a cry of Existentialist despair. As a self-reflective consciousness/IMS arose, the two hemispheres integrated, the voices stopped, and the anguish began as humans were without authoritative commands when instinct failed to guide them, and yet in full knowledge that their own choices had consequences. The answer was—first, to create a framework for self-reliance attributing it to one god, with the high priests as the medium, and then, when the priests faltered, to place the holy word in people’s hands for a direct communion with God.

As recently as 2014, Olga Khazan was reporting in The Atlantic (“When Hearing Voices Is a Good Thing”) the work of Stanford anthropologist Tanya Luhrmann, who concluded: “Our work found that people with serious psychotic disorders in different cultures have different voice-hearing experiences.” Khazan reports that schizophrenic people from collectivist cultures don’t hear the same vicious, dark voices that Americans do. Some of them, in fact, think their hallucinations are good or even magical. Their doctors support them, which may explain why they do not become antisocial or violent.

When a survey in the early 1990s found that 10-15% of the U.S. population experienced vivid sensory hallucinations at some point in their lives, scientists began to take seriously the idea that voice hearing and other forms of auditory hallucination can be benign or nonclinical—and “that auditory hallucinations are the result of the mind failing to brand its actions as its own.”

Hypothesis I—the How

In the conventional measure of evolutionary time, 4,000 years is but a half-hour. But if IMS is learned, it’s also a skill. If we time-traveled a newborn from bicameral times and it grew up in a normal household today, most likely it would attain a self-reflective consciousness (though see the Persinger quote below; perhaps some genome changes have occurred). And a newborn time-traveled back thousands of years might never attain it.

Philosopher Daniel Dennett likens the Jaynesian theory to having only your software upgraded (as against a change in the neural hardware), which, he contends, can sometimes make discontinuous leaps of improvement.

And, in a nod to the growing field of gene-culture coevolution, neuroscientist Michael Persinger notes: “There have been approximately 15 million changes in our species’ genome since our common ancestor with the chimpanzee. There are human accelerated regions in the genome with genes known to be involved in transcriptional regulation and neurodevelopment. They are expressed within brain structures that would have allowed precisely the types of phenomena that Jaynes predicted had occurred around 3,500 years ago. Related genes, attributed to religious beliefs, are found on the same chromosome (for example, chromosome 10) as propensities for specific forms of epilepsy (partial, with auditory features) and schizophrenia. …”

The hypothesis that a self-reflective consciousness is not a permanent biological outcome but arises lexically and culturally is not unique to Jaynes. Other scholars before and after Jaynes, such as Russian psychologist Vygotsky, have championed the thesis that IMS is created lexically (refer to Ch. 6 in DC, and “Language Required for Consciousness” on the JJS site). Philosopher Christopher Gauker (University of Cincinnati) also holds that language is a prerequisite to all conceptual thought; indeed, he contends that it is the means by which conceptual thought becomes possible.

Toddlers come to conceptualize the “I” through verbal exchanges: What did you do today, Johnny? How do you feel, Jane? Do you remember when we were at the beach and we saw …? Further, even deaf individuals taught a sign language reported a dramatic change in consciousness (Ch. 6, DC).

Hypothesis III—the When

In an astounding challenge to established wisdom, however, Jaynes claims explicitly, chapter by chapter, that consciousness (or what we have called an introspective mind space) is not required for thinking, learning, and reasoning.

“Consciousness is a much smaller part of our mental life than we are conscious of, because we cannot be conscious of what we are not conscious of.” Julian Jaynes (OC Book I, Ch. 1)

It’s true that sometimes conscious thinking slows us down. John Limber (University of New Hampshire) recalls how his daughter was unbeatable at the Concentration game (a stack of playing cards is shown briefly and then put face down, and the game is to remember which card is where) when she was two, but played much more poorly as she got older and developed IMS (Ch. 6, DC).

But it’s that brazen premise, the delinking of reason from introspective mind space, that allows Jaynes to date the dawn of consciousness in humans after agriculture and the earliest pyramids and forms of writing.

The detective in Jaynes is undaunted by where the trail leads, and relentlessly follows the evidence (in literature, anthropology, and archaeology) to infer the dating. For example, The Iliad and several other texts of that era suggest a Jaynesian bicameral, voice-of-god approach to life, with no “I” as doer but only a “me” to whom things are done, whereas in The Odyssey the analog “I” is awakened and the protagonist frequently consults himself. Homer, Jaynes opines, either never existed or did so only as a narrator (but not a scribe) of just a part of The Iliad, which Jaynes reads as actual history, not fiction; the textual inconsistency suggests to scholars that the text was written over long periods of time by multiple authors.

A similar dichotomy, from “the Gods told me to do this” to “what shall I do?”, exists between the older and newer versions (a time spread of centuries) of the Old Testament, says Rabbi James Cohn in “Why the Bible is a written timeline of the dawn of consciousness” (a lecture) and in his conjectures on the cultural evolution of human consciousness (an interview).

Indeed, Jaynes attributes the message in the Book of Genesis’s “Fall of Man” (from a state of innocent obedience to God to a state of guilty disobedience) to a longing for God’s voice. Jesus, he says, was trying to help men to adapt to a new, self-reliant way of thinking.

At Stake Is Humanity’s Paradox

We live in paradoxical times.

Today, in the 21st Century, the poorest parts of the world have never been richer in every respect, relative to their own history. Significantly so. And the richer parts of the world are also better off, in wealth, longevity, access to preventive and curative medicine, freedom from wars and violence, than ever before.

Yet, as a whole, the human species continues to suffer varying levels of angst, frustration, worry, drug addiction, even depression, as though harnessing nature and gaining ever more relief and cure from disease had not bettered human lives. Even in the West, and indeed globally, suicide rates have actually increased, as existentialist, soul-suppressing philosophies have taken root in the culture.

Modern humans have:

- A recognition of a continuous identity (for coherence);

- An autobiographical memory of significant personal events set in linear time;

- A personality, which includes traits, attributes, and dispositions (some genetic, some self-created), plus standing orders (or the ethic) as a guide to choices;

- A reflective self-consciousness, which is, in effect, an awareness of the fact that we are aware of ourselves and our actions, and thus can contemplate their aftereffects;

And, while humans are not born with a blank slate (tabula rasa) in that they have genetic instincts, needs, and potentialities, they have:

- An ability to override or withstand instincts with deliberations (short-term free will);

- Neuroplasticity, the ability of our mind to rewire our brain, physically and functionally, throughout our life. This rewiring includes the ability to affect the subconscious and change the standing orders the brain feeds the mind, i.e., long-term free will; and

- The ability, sometimes accompanied by a desire, to create a non-genetic legacy.

Let’s entertain the possibility that anatomically modern humans, which biology calls Homo sapiens, and which paleontology contends arose 160,000 years ago, could not introspect. If a time machine transported adult humans of 50,000 years ago to current times, they could probably mate with modern humans and produce offspring.

A subspecies, characterized by some differing traits, typically evolves in an isolated geographic area, but remains the same species—it can reproduce within itself and by mating with the rest of the species. If Jaynes is right, metaphorically, if not genetically, we are a new subspecies, separated by time rather than geography. Our subspecies is big-brain hominids (or Homo sapiens) with an introspective mind space.

We need an efficient and effective introspective mind space that minimizes mental illness, frustration, anxiety, depression, and melancholia, and creates a better foundation for a serenely happy, flourishing, productive self. For that we need a complete theory of the mind, which cannot ignore the possibility of a lexical creation of a self-reflective consciousness, nor the likelihood of its tortured historical journey to self-emancipation.

A Side Bar on Anthropology and Political Correctness

Perhaps we may find footprints of bicameralism in remote and ancient cultures (see Vestiges of Bicameralism in Pre-Literate Societies). Unfortunately, when a professor (Brian McVeigh) decided to supplement his fieldwork in a remote Japanese religious community by employing Jaynes’s theory, Princeton’s Department of Anthropology Dissertation Committee dismissed their distinguished alumnus’s ideas as loony and called his work “ethnocentric” (i.e. arising from a belief in the inherent superiority of one’s own ethnic group or culture).

But in OC, Jaynes states that he doesn’t know why more people reported having experienced hallucinations in Russia and Brazil than in the U.K. (in one study). Jaynes does ask, however, how the great Inca empire was “captured by a small band of 150 Spaniards” in 1532. He answers his own question: “… not subjectively conscious, unable to deceive or to narrativize out the deception of others, the Inca and his lords were captured like helpless automatons.”

A Footnote for Objectivists

Julian Jaynes and philosopher Ayn Rand never met, it seems. Rand died in 1982, six years after Jaynes published his magnum opus (OC).

Rand had a certain level of discomfort with Darwin’s theory of evolution: the most crucial aspect of the human organism, volitional consciousness arising as an ability to model and conceptualize reality, had not been explained or marked in the timespan of biological history.

In her journal entries, Rand speculated: “We may still be in evolution, as a species, and living side by side with some ‘missing links.’ We do not know to what extent the majority of men are now rational.” Perhaps her instincts could be proven prescient in the timeline of science.

Jaynes would have given Rand one explanation of why the anti-conceptual mentality persists and why consciousness is volitional: it’s a learned skill. The longing for mystic solutions, for the commanding voices of gods and kings, is an imprint of the bicameral mentality from before the cognitive revolution in Ancient Greece.

I suspect the two great thinkers would have gotten along. Jaynes had made a valiant attempt at finishing Darwin’s symphony and would have furnished Rand with a hypothesis of a “missing link” and a theory for the residues of the bicameral mentality she saw all around her.

And Jaynes, burrowed deep in ancient texts, perhaps never read Anthem, a dystopian novella of a totalitarian future in which the word “I” had vanished (“Yet again?” Jaynes may have remarked). Perhaps he would have loved the novella, placing it back in time, prior to Ancient Greece.

He might have highlighted this quote:

“And now I see the face of god, and I raise this god over the earth, this god whom men have sought since men came into being, this god who will grant them joy and peace and pride.

This god, this one word: ‘I.’”

- Ayn Rand, Anthem

This essay benefited from comments made on earlier drafts by Donna Paris, Walter Donway, Marcel Kuijsten, and Marsha Familaro Enright. The author would like to thank them all.